|

|

| Line 595: |

Line 595: |

| | <p>(Peirce, CP 3.73).</p> | | <p>(Peirce, CP 3.73).</p> |

| | |} | | |} |

| − |

| |

| − | ===Commentary Note 8.1===

| |

| − |

| |

| − | To my way of thinking, CP 3.73 is one of the most remarkable passages in the history of logic. In this first pass over its deeper contents I won't be able to accord it much more than a superficial dusting off.

| |

| − |

| |

| − | Let us imagine a concrete example that will serve in developing the uses of Peirce's notation. Entertain a discourse whose universe <math>X\!</math> will remind us a little of the cast of characters in Shakespeare's ''Othello''.

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | | <math>X ~=~ \{ \mathrm{Bianca}, \mathrm{Cassio}, \mathrm{Clown}, \mathrm{Desdemona}, \mathrm{Emilia}, \mathrm{Iago}, \mathrm{Othello} \}.</math>

| |

| − | |}

| |

| − |

| |

| − | The universe <math>X\!</math> is “that class of individuals ''about'' which alone the whole discourse is understood to run”, but its marking out for special recognition as a universe of discourse in no way rules out the possibility that “discourse may run upon something which is not a subjective part of the universe; for instance, upon the qualities or collections of the individuals it contains” (CP 3.65).

| |

| − |

| |

| − | In order to provide ourselves with the convenience of abbreviated terms, while preserving Peirce's conventions about capitalization, we may use the alternate names <math>^{\backprime\backprime}\mathrm{u}^{\prime\prime}</math> for the universe <math>X\!</math> and <math>^{\backprime\backprime}\mathrm{Jeste}^{\prime\prime}</math> for the character <math>\mathrm{Clown}.~\!</math> This permits the above description of the universe of discourse to be rewritten in the following fashion:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | | <math>\mathrm{u} ~=~ \{ \mathrm{B}, \mathrm{C}, \mathrm{D}, \mathrm{E}, \mathrm{I}, \mathrm{J}, \mathrm{O} \}</math>

| |

| − | |}

| |

| − |

| |

| − | This specification of the universe of discourse could be summed up in Peirce's notation by the following equation:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{15}{c}}

| |

| − | \mathbf{1}

| |

| − | & = & \mathrm{B}

| |

| − | & +\!\!, & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}

| |

| − | & +\!\!, & \mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | Within this discussion, then, the ''individual terms'' are as follows:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | ^{\backprime\backprime}\mathrm{B}^{\prime\prime}, &

| |

| − | ^{\backprime\backprime}\mathrm{C}^{\prime\prime}, &

| |

| − | ^{\backprime\backprime}\mathrm{D}^{\prime\prime}, &

| |

| − | ^{\backprime\backprime}\mathrm{E}^{\prime\prime}, &

| |

| − | ^{\backprime\backprime}\mathrm{I}^{\prime\prime}, &

| |

| − | ^{\backprime\backprime}\mathrm{J}^{\prime\prime}, &

| |

| − | ^{\backprime\backprime}\mathrm{O}^{\prime\prime}

| |

| − | \end{matrix}</math>

| |

| − | |}

| |

| − |

| |

| − | Each of these terms denotes in a singular fashion the corresponding individual in <math>X.\!</math>

| |

| − |

| |

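| − | By way of ''general terms'' in this discussion, we may begin with the following set: <math>^{\backprime\backprime}\mathrm{b}^{\prime\prime}</math> for “black”, <math>^{\backprime\backprime}\mathrm{m}^{\prime\prime}</math> for “man”, and <math>^{\backprime\backprime}\mathrm{w}^{\prime\prime}</math> for “woman”.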

| |

| − |

| |

| − | ===Commentary Note 8.2===

| |

| − |

| |

| − | I continue with my commentary on CP 3.73, developing the ''Othello'' example as a way of illustrating Peirce's concepts.

| |

| − |

| |

| − | In the development of the story so far, we have a universe of discourse that can be characterized by means of the following system of equations:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{15}{c}}

| |

| − | \mathbf{1}

| |

| − | & = & \mathrm{B}

| |

| − | & +\!\!, & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}

| |

| − | & +\!\!, & \mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{b}

| |

| − | & = & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{m}

| |

| − | & = & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{w}

| |

| − | & = & \mathrm{B}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}

| |

| − | \end{array}</math>

| |

| − | |}
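
The system of equations above can be mirrored with ordinary sets. This is only a minimal sketch: it assumes that Peirce's logical sum <math>+\!\!,</math> may be read as set union, and the Python names `universe`, `b`, `m`, and `w` are illustrative choices, not part of Peirce's notation.

```python
# Absolute terms of the Othello example as plain Python sets.
# Single-letter strings stand for the individuals B, C, D, E, I, J, O.
universe = frozenset("BCDEIJO")   # the term 1
b = frozenset("O")                # b = O
m = frozenset("CIJO")             # m = C +, I +, J +, O
w = frozenset("BDE")              # w = B +, D +, E

# Assumption: the logical sum "+," is modelled by set union.
assert m | w == universe          # every individual falls under m or w
assert m & w == frozenset()       # no individual falls under both
assert b <= m                     # whatever is b is also m

print(sorted(m | w))
```

Under this reading, the three assertions simply restate facts that can be read off the equations: <math>\mathrm{m}</math> and <math>\mathrm{w}</math> together exhaust <math>\mathbf{1}</math>, they share no individual, and <math>\mathrm{b}</math> is included in <math>\mathrm{m}.</math>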

| |

| − |

| |

| − | This much provides a basis for the collection of absolute terms that I plan to use in this example. Let us now consider how we might represent a sufficiently exemplary collection of relative terms.

| |

| − |

| |

| − | Consider the genesis of relative terms, for example:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{l}

| |

| − | ^{\backprime\backprime}\, \text{lover of}\, \underline{~~ ~~}\, ^{\prime\prime}

| |

| − | \\[6pt]

| |

| − | ^{\backprime\backprime}\, \text{betrayer to}\, \underline{~~ ~~}\, \text{of}\, \underline{~~ ~~}\, ^{\prime\prime}

| |

| − | \\[6pt]

| |

| − | ^{\backprime\backprime}\, \text{winner over of}\, \underline{~~ ~~}\, \text{to}\, \underline{~~ ~~}\, \text{from}\, \underline{~~ ~~}\, ^{\prime\prime}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | We may regard these fill-in-the-blank forms as being derived by a kind of ''rhematic abstraction'' from the corresponding instances of absolute terms.

| |

| − |

| |

| − | In other words:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <p>The relative term <math>^{\backprime\backprime}\, \text{lover of}\, \underline{~~ ~~}\, ^{\prime\prime}</math></p>

| |

| − |

| |

| − | <p>can be reached by removing the absolute term <math>^{\backprime\backprime}\, \text{Emilia}\, ^{\prime\prime}</math></p>

| |

| − |

| |

| − | <p>from the absolute term <math>^{\backprime\backprime}\, \text{lover of Emilia}\, ^{\prime\prime}.</math></p>

| |

| − |

| |

| − | <p><math>\text{Iago}</math> is a lover of <math>\text{Emilia},</math> so the relate-correlate pair <math>\mathrm{I}:\mathrm{E}</math></p>

| |

| − |

| |

| − | <p>lies in the 2-adic relation associated with the relative term <math>^{\backprime\backprime}\, \text{lover of}\, \underline{~~ ~~}\, ^{\prime\prime}.</math></p>

| |

| − | |-

| |

| − | |

| |

| − | <p>The relative term <math>^{\backprime\backprime}\, \text{betrayer to}\, \underline{~~ ~~}\, \text{of}\, \underline{~~ ~~}\, ^{\prime\prime}</math></p>

| |

| − |

| |

| − | <p>can be reached by removing the absolute terms <math>^{\backprime\backprime}\, \text{Othello}\, ^{\prime\prime}</math> and <math>^{\backprime\backprime}\, \text{Desdemona}\, ^{\prime\prime}</math></p>

| |

| − |

| |

| − | <p>from the absolute term <math>^{\backprime\backprime}\, \text{betrayer to Othello of Desdemona}\, ^{\prime\prime}.</math></p>

| |

| − |

| |

| − | <p><math>\text{Iago}</math> is a betrayer to <math>\text{Othello}</math> of <math>\text{Desdemona},</math> so the relate-correlate-correlate triple <math>\mathrm{I}:\mathrm{O}:\mathrm{D}</math></p>

| |

| − |

| |

| − | <p>lies in the 3-adic relation associated with the relative term <math>^{\backprime\backprime}\, \text{betrayer to}\, \underline{~~ ~~}\, \text{of}\, \underline{~~ ~~}\, ^{\prime\prime}.\!</math></p>

| |

| − | |-

| |

| − | |

| |

| − | <p>The relative term <math>^{\backprime\backprime}\, \text{winner over of}\, \underline{~~ ~~}\, \text{to}\, \underline{~~ ~~}\, \text{from}\, \underline{~~ ~~}\, ^{\prime\prime}</math></p>

| |

| − |

| |

| − | <p>can be reached by removing the absolute terms <math>^{\backprime\backprime}\, \text{Othello}\, ^{\prime\prime},</math> <math>^{\backprime\backprime}\, \text{Iago}\, ^{\prime\prime},</math> and <math>^{\backprime\backprime}\, \text{Cassio}\, ^{\prime\prime}</math></p>

| |

| − |

| |

| − | <p>from the absolute term <math>^{\backprime\backprime}\, \text{winner over of Othello to Iago from Cassio}\, ^{\prime\prime}.</math></p>

| |

| − |

| |

| − | <p><math>\text{Iago}</math> is a winner over of <math>\text{Othello}</math> to <math>\text{Iago}</math> from <math>\text{Cassio},\!</math> so the elementary relative term <math>\mathrm{I}:\mathrm{O}:\mathrm{I}:\mathrm{C}</math></p>

| |

| − |

| |

| − | <p>lies in the 4-adic relation associated with the relative term <math>^{\backprime\backprime}\, \text{winner over of}\, \underline{~~ ~~}\, \text{to}\, \underline{~~ ~~}\, \text{from}\, \underline{~~ ~~}\, ^{\prime\prime}.</math></p>

| |

| − | |}
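
One way to picture the fill-in-the-blank forms above is to store each elementary relative as a tuple whose first slot is the relate and whose remaining slots are the correlates. The sketch below is a hedged illustration only: the helper `fill_blanks` and the variable names are hypothetical, and each relation contains just the single tuple mentioned in the text, not the full extension of the relative term.

```python
# Elementary relatives as tuples: (relate, correlate, correlate, ...).
# Only the tuples named in the text are included here.
lover_of = {("I", "E")}                           # I:E
betrayer_to_of = {("I", "O", "D")}                # I:O:D
winner_over_of_to_from = {("I", "O", "I", "C")}   # I:O:I:C

def fill_blanks(relation, *correlates):
    """Fill every blank of a relative term with the given correlates
    and return the set of relates that the resulting absolute term denotes."""
    return {t[0] for t in relation if t[1:] == correlates}

# "lover of Emilia", "betrayer to Othello of Desdemona",
# "winner over of Othello to Iago from Cassio"
print(fill_blanks(lover_of, "E"))
print(fill_blanks(betrayer_to_of, "O", "D"))
print(fill_blanks(winner_over_of_to_from, "O", "I", "C"))
```

Filling the blanks runs in the opposite direction to the abstraction described above: it starts from the relative term and recovers an absolute term by supplying the removed names.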

| |

| − |

| |

| − | ===Commentary Note 8.3===

| |

| − |

| |

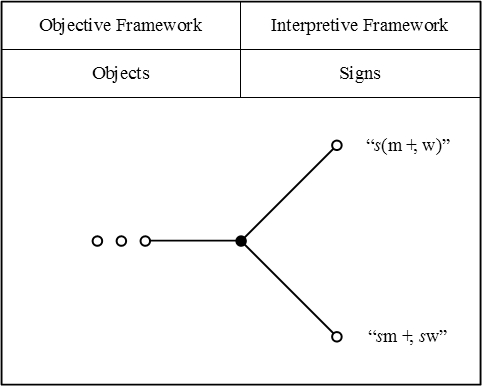

| − | Speaking very strictly, we need to be careful to distinguish a ''relation'' from a ''relative term''.

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <p>The relation is an ''object'' of thought that may be regarded ''in extension'' as a set of ordered tuples that are known as its ''elementary relations''.</p>

| |

| − | |-

| |

| − | |

| |

| − | <p>The relative term is a ''sign'' that denotes certain objects, called its ''relates'', as these are determined in relation to certain other objects, called its ''correlates''. Under most circumstances, one may also regard the relative term as denoting the corresponding relation.</p>

| |

| − | |}

| |

| − |

| |

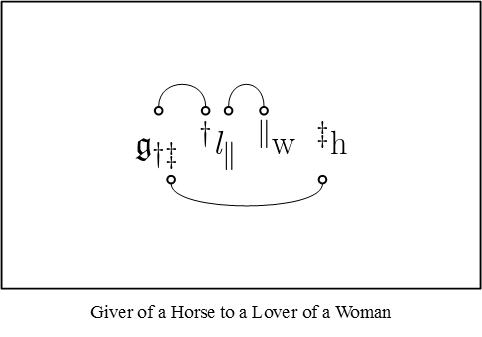

| − | Returning to the Othello example, let us take up the 2-adic relatives <math>^{\backprime\backprime}\, \text{lover of}\, \underline{~~ ~~}\, ^{\prime\prime}</math> and <math>^{\backprime\backprime}\, \text{servant of}\, \underline{~~ ~~}\, ^{\prime\prime}.</math>

| |

| − |

| |

| − | Ignoring the many-splendored nuances appurtenant to the idea of love, we may regard the relative term <math>\mathit{l}\!</math> for <math>^{\backprime\backprime}\, \text{lover of}\, \underline{~~ ~~}\, ^{\prime\prime}</math> as given by the following equation:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{13}{c}}

| |

| − | \mathit{l}

| |

| − | & = &

| |

| − | \mathrm{B}:\mathrm{C}

| |

| − | & +\!\!, &

| |

| − | \mathrm{C}:\mathrm{B}

| |

| − | & +\!\!, &

| |

| − | \mathrm{D}:\mathrm{O}

| |

| − | & +\!\!, &

| |

| − | \mathrm{E}:\mathrm{I}

| |

| − | & +\!\!, &

| |

| − | \mathrm{I}:\mathrm{E}

| |

| − | & +\!\!, &

| |

| − | \mathrm{O}:\mathrm{D}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | If for no better reason than to make the example more interesting, let us put aside all distinctions of rank and fealty, collapsing the motley crews of attendant, servant, subordinate, and so on, under the heading of a single service, denoted by the relative term <math>\mathit{s}\!</math> for <math>^{\backprime\backprime}\, \text{servant of}\, \underline{~~ ~~}\, ^{\prime\prime}.</math> The terms of this service are:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{11}{c}}

| |

| − | \mathit{s}

| |

| − | & = &

| |

| − | \mathrm{C}:\mathrm{O}

| |

| − | & +\!\!, &

| |

| − | \mathrm{E}:\mathrm{D}

| |

| − | & +\!\!, &

| |

| − | \mathrm{I}:\mathrm{O}

| |

| − | & +\!\!, &

| |

| − | \mathrm{J}:\mathrm{D}

| |

| − | & +\!\!, &

| |

| − | \mathrm{J}:\mathrm{O}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | The term <math>\mathrm{I}:\mathrm{C}\!</math> may also be implied, but, since it is so hotly arguable, I will leave it out of the toll.

| |

| − |

| |

| − | One more thing that we need to be duly wary about: There are many different conventions in the field as to the ordering of terms in their applications, and it happens that different conventions will be more convenient under different circumstances, so there does not appear to be much of a chance that any one of them can be canonized once and for all.

| |

| − |

| |

| − | In the current reading, we are applying relative terms from right to left, and so our conception of relative multiplication, or relational composition, will need to be adjusted accordingly.

| |

| − |

| |

| − | ===Commentary Note 8.4===

| |

| − |

| |

| − | To familiarize ourselves with the forms of calculation that are available in Peirce's notation, let us compute a few of the simplest products that we find at hand in the Othello case.

| |

| − |

| |

| − | Here are the absolute terms:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{15}{c}}

| |

| − | \mathbf{1}

| |

| − | & = & \mathrm{B}

| |

| − | & +\!\!, & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}

| |

| − | & +\!\!, & \mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{b}

| |

| − | & = & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{m}

| |

| − | & = & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{w}

| |

| − | & = & \mathrm{B}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}

| |

| − | \end{array}\!</math>

| |

| − | |}

| |

| − |

| |

| − | Here are the 2-adic relative terms:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{13}{c}}

| |

| − | \mathit{l}

| |

| − | & = & \mathrm{B}:\mathrm{C}

| |

| − | & +\!\!, & \mathrm{C}:\mathrm{B}

| |

| − | & +\!\!, & \mathrm{D}:\mathrm{O}

| |

| − | & +\!\!, & \mathrm{E}:\mathrm{I}

| |

| − | & +\!\!, & \mathrm{I}:\mathrm{E}

| |

| − | & +\!\!, & \mathrm{O}:\mathrm{D}

| |

| − | \\[6pt]

| |

| − | \mathit{s}

| |

| − | & = & \mathrm{C}:\mathrm{O}

| |

| − | & +\!\!, & \mathrm{E}:\mathrm{D}

| |

| − | & +\!\!, & \mathrm{I}:\mathrm{O}

| |

| − | & +\!\!, & \mathrm{J}:\mathrm{D}

| |

| − | & +\!\!, & \mathrm{J}:\mathrm{O}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | Here are a few of the simplest products among these terms:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathit{l}\mathbf{1}

| |

| − | & = &

| |

| − | \text{lover of anything}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{B}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{C}\!:\!\mathrm{B} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{E} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{D})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{B} ~+\!\!,~ \mathrm{C} ~+\!\!,~ \mathrm{D} ~+\!\!,~ \mathrm{E} ~+\!\!,~ \mathrm{I} ~+\!\!,~ \mathrm{J} ~+\!\!,~ \mathrm{O})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{B} ~+\!\!,~ \mathrm{C} ~+\!\!,~ \mathrm{D} ~+\!\!,~ \mathrm{E} ~+\!\!,~ \mathrm{I} ~+\!\!,~ \mathrm{O}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \text{anything except}~\mathrm{J}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathit{l}\mathrm{b}

| |

| − | & = &

| |

| − | \text{lover of a black}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{B}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{C}\!:\!\mathrm{B} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{E} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{D})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | \mathrm{O}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{D}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathit{l}\mathrm{m}

| |

| − | & = &

| |

| − | \text{lover of a man}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{B}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{C}\!:\!\mathrm{B} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{E} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{D})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{C} ~+\!\!,~ \mathrm{I} ~+\!\!,~ \mathrm{J} ~+\!\!,~ \mathrm{O})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{B} ~+\!\!,~ \mathrm{D} ~+\!\!,~ \mathrm{E}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathit{l}\mathrm{w}

| |

| − | & = &

| |

| − | \text{lover of a woman}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{B}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{C}\!:\!\mathrm{B} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{E} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{D})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{B} ~+\!\!,~ \mathrm{D} ~+\!\!,~ \mathrm{E})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{C} ~+\!\!,~ \mathrm{I} ~+\!\!,~ \mathrm{O}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathit{s}\mathbf{1}

| |

| − | & = &

| |

| − | \text{servant of anything}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{C}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{O})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{B} ~+\!\!,~ \mathrm{C} ~+\!\!,~ \mathrm{D} ~+\!\!,~ \mathrm{E} ~+\!\!,~ \mathrm{I} ~+\!\!,~ \mathrm{J} ~+\!\!,~ \mathrm{O})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{C} ~+\!\!,~ \mathrm{E} ~+\!\!,~ \mathrm{I} ~+\!\!,~ \mathrm{J}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathit{s}\mathrm{b}

| |

| − | & = &

| |

| − | \text{servant of a black}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{C}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{O})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | \mathrm{O}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{C} ~+\!\!,~ \mathrm{I} ~+\!\!,~ \mathrm{J}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathit{s}\mathrm{m}

| |

| − | & = &

| |

| − | \text{servant of a man}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{C}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{O})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{C} ~+\!\!,~ \mathrm{I} ~+\!\!,~ \mathrm{J} ~+\!\!,~ \mathrm{O})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{C} ~+\!\!,~ \mathrm{I} ~+\!\!,~ \mathrm{J}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathit{s}\mathrm{w}

| |

| − | & = &

| |

| − | \text{servant of a woman}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{C}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{O})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{B} ~+\!\!,~ \mathrm{D} ~+\!\!,~ \mathrm{E})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{E} ~+\!\!,~ \mathrm{J}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathit{l}\mathit{s}

| |

| − | & = &

| |

| − | \text{lover of a servant of}\, \underline{~~ ~~}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{B}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{C}\!:\!\mathrm{B} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{E} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{D})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{C}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{O})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{B}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{D}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathit{s}\mathit{l}

| |

| − | & = &

| |

| − | \text{servant of a lover of}\, \underline{~~ ~~}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{C}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{O})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{B}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{C}\!:\!\mathrm{B} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{E} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{D})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{C}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{O} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{O}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | Among other things, one observes that the relative terms <math>\mathit{l}\!</math> and <math>\mathit{s}\!</math> do not commute, that is, <math>\mathit{l}\mathit{s}\!</math> is not equal to <math>\mathit{s}\mathit{l}.~\!</math>
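
The products computed in this note can be checked mechanically. The following is a minimal sketch, assuming that a pair `(x, y)` encodes the elementary relative <math>\mathrm{x}:\mathrm{y}</math> and that relative terms are applied from right to left as described earlier; the function names `apply_to` and `compose` are illustrative, not Peirce's.

```python
universe = frozenset("BCDEIJO")
b, m, w = frozenset("O"), frozenset("CIJO"), frozenset("BDE")

# A pair (x, y) stands for x:y, read "x is a lover (servant) of y".
lover = {("B", "C"), ("C", "B"), ("D", "O"), ("E", "I"), ("I", "E"), ("O", "D")}
servant = {("C", "O"), ("E", "D"), ("I", "O"), ("J", "D"), ("J", "O")}

def apply_to(rel, term):
    """Relative term applied to an absolute term:
    the relates x of every pair x:y whose correlate y falls under the term."""
    return {x for (x, y) in rel if y in term}

def compose(p, q):
    """Relative product p q: all x:z such that x:y is in p and y:z is in q."""
    return {(x, z) for (x, y) in p for (y2, z) in q if y == y2}

# Products of a relative term with an absolute term.
assert apply_to(lover, universe) == set("BCDEIO")   # anything except J
assert apply_to(lover, b) == set("D")
assert apply_to(lover, m) == set("BDE")
assert apply_to(lover, w) == set("CIO")
assert apply_to(servant, universe) == set("CEIJ")
assert apply_to(servant, b) == set("CIJ")
assert apply_to(servant, m) == set("CIJ")
assert apply_to(servant, w) == set("EJ")

# Products of two relative terms; the order of the factors matters.
ls = compose(lover, servant)
sl = compose(servant, lover)
assert ls == {("B", "O"), ("E", "O"), ("I", "D")}
assert sl == {("C", "D"), ("E", "O"), ("I", "D"), ("J", "D"), ("J", "O")}
assert ls != sl
```

Swapping the roles of the two slots in `compose` would correspond to one of the other ordering conventions mentioned earlier, so the choice made here is tied to the right-to-left reading used in this commentary.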

| |

| − |

| |

| − | ===Commentary Note 8.5===

| |

| − |

| |

| − | Since multiplication by a 2-adic relative term is a logical analogue of matrix multiplication in linear algebra, all of the products that we computed above can be represented in terms of logical matrices and logical vectors.

| |

| − |

| |

| − | Here are the absolute terms again, followed by their representation as ''coefficient tuples'', otherwise thought of as ''coordinate vectors''.

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{ccrcccccccccccl}

| |

| − | \mathbf{1}

| |

| − | & = & \mathrm{B}

| |

| − | & +\!\!, & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}

| |

| − | & +\!\!, & \mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[10pt]

| |

| − | & = & (1 & , & 1 & , & 1 & , & 1 & , & 1 & , & 1 & , & 1)

| |

| − | \\[20pt]

| |

| − | \mathrm{b}

| |

| − | & = &

| |

| − | & &

| |

| − | & &

| |

| − | & &

| |

| − | & &

| |

| − | & &

| |

| − | & &

| |

| − | \mathrm{O}

| |

| − | \\[10pt]

| |

| − | & = & (0 & , & 0 & , & 0 & , & 0 & , & 0 & , & 0 & , & 1)

| |

| − | \\[20pt]

| |

| − | \mathrm{m}

| |

| − | & = &

| |

| − | & & \mathrm{C}

| |

| − | & &

| |

| − | & &

| |

| − | & +\!\!, & \mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[10pt]

| |

| − | & = & (0 & , & 1 & , & 0 & , & 0 & , & 1 & , & 1 & , & 1)

| |

| − | \\[20pt]

| |

| − | \mathrm{w}

| |

| − | & = & \mathrm{B}

| |

| − | & &

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}

| |

| − | & &

| |

| − | & &

| |

| − | & &

| |

| − | \\[10pt]

| |

| − | & = & (1 & , & 0 & , & 1 & , & 1 & , & 0 & , & 0 & , & 0)

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | Since we are going to be regarding these tuples as ''column vectors'', it is convenient to arrange them into a table of the following form:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{c|cccc}

| |

| − | \text{ } & \mathbf{1} & \mathrm{b} & \mathrm{m} & \mathrm{w}

| |

| − | \\

| |

| − | \text{---} & \text{---} & \text{---} & \text{---} & \text{---}

| |

| − | \\

| |

| − | \mathrm{B} & 1 & 0 & 0 & 1

| |

| − | \\

| |

| − | \mathrm{C} & 1 & 0 & 1 & 0

| |

| − | \\

| |

| − | \mathrm{D} & 1 & 0 & 0 & 1

| |

| − | \\

| |

| − | \mathrm{E} & 1 & 0 & 0 & 1

| |

| − | \\

| |

| − | \mathrm{I} & 1 & 0 & 1 & 0

| |

| − | \\

| |

| − | \mathrm{J} & 1 & 0 & 1 & 0

| |

| − | \\

| |

| − | \mathrm{O} & 1 & 1 & 1 & 0

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | Here are the 2-adic relative terms again, followed by their representation as coefficient matrices, in this case bordered by row and column labels to remind us what the coefficient values are meant to signify.

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{13}{c}}

| |

| − | \mathit{l}

| |

| − | & = & \mathrm{B}:\mathrm{C}

| |

| − | & +\!\!, & \mathrm{C}:\mathrm{B}

| |

| − | & +\!\!, & \mathrm{D}:\mathrm{O}

| |

| − | & +\!\!, & \mathrm{E}:\mathrm{I}

| |

| − | & +\!\!, & \mathrm{I}:\mathrm{E}

| |

| − | & +\!\!, & \mathrm{O}:\mathrm{D}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{c|ccccccc}

| |

| − | \mathit{l} &

| |

| − | \mathrm{B} &

| |

| − | \mathrm{C} &

| |

| − | \mathrm{D} &

| |

| − | \mathrm{E} &

| |

| − | \mathrm{I} &

| |

| − | \mathrm{J} &

| |

| − | \mathrm{O}

| |

| − | \\

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---}

| |

| − | \\

| |

| − | \mathrm{B} & 0 & 1 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | \mathrm{C} & 1 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | \mathrm{D} & 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | \mathrm{E} & 0 & 0 & 0 & 0 & 1 & 0 & 0

| |

| − | \\

| |

| − | \mathrm{I} & 0 & 0 & 0 & 1 & 0 & 0 & 0

| |

| − | \\

| |

| − | \mathrm{J} & 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | \mathrm{O} & 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{13}{c}}

| |

| − | \mathit{s}

| |

| − | & = & \mathrm{C}:\mathrm{O}

| |

| − | & +\!\!, & \mathrm{E}:\mathrm{D}

| |

| − | & +\!\!, & \mathrm{I}:\mathrm{O}

| |

| − | & +\!\!, & \mathrm{J}:\mathrm{D}

| |

| − | & +\!\!, & \mathrm{J}:\mathrm{O}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{c|ccccccc}

| |

| − | \mathit{s} &

| |

| − | \mathrm{B} &

| |

| − | \mathrm{C} &

| |

| − | \mathrm{D} &

| |

| − | \mathrm{E} &

| |

| − | \mathrm{I} &

| |

| − | \mathrm{J} &

| |

| − | \mathrm{O}

| |

| − | \\

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---} &

| |

| − | \text{---}

| |

| − | \\

| |

| − | \mathrm{B} & 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | \mathrm{C} & 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | \mathrm{D} & 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | \mathrm{E} & 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | \mathrm{I} & 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | \mathrm{J} & 0 & 0 & 1 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | \mathrm{O} & 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \end{array}</math>

| |

| − | |}
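
The coefficient vectors and matrices above can be generated from the sums of elementary relatives, and the logical analogue of matrix multiplication can then be written with "or" in place of addition and "and" in place of multiplication. This is a hedged sketch in plain Python: the ordering string `ORDER` and the helper names are assumptions made for illustration.

```python
ORDER = "BCDEIJO"   # fixed ordering of individuals for rows and columns

lover = {("B", "C"), ("C", "B"), ("D", "O"), ("E", "I"), ("I", "E"), ("O", "D")}
servant = {("C", "O"), ("E", "D"), ("I", "O"), ("J", "D"), ("J", "O")}

def vector(term):
    """Coefficient tuple of an absolute term, to be read as a column vector."""
    return [1 if x in term else 0 for x in ORDER]

def matrix(pairs):
    """Coefficient matrix of a 2-adic relative: entry (x, y) is 1 iff x:y occurs."""
    return [[1 if (x, y) in pairs else 0 for y in ORDER] for x in ORDER]

def bool_matvec(mat, vec):
    """Logical matrix-vector product: 'or' over k of (mat[i][k] 'and' vec[k])."""
    return [int(any(a and v for a, v in zip(row, vec))) for row in mat]

def bool_matmul(p, q):
    """Logical matrix product: 'or' over k of (p[i][k] 'and' q[k][j])."""
    n = len(ORDER)
    return [[int(any(p[i][k] and q[k][j] for k in range(n)))
             for j in range(n)] for i in range(n)]

L, S = matrix(lover), matrix(servant)

# Lover of anything, and lover of a black, as coordinate vectors.
assert bool_matvec(L, vector("BCDEIJO")) == [1, 1, 1, 1, 1, 0, 1]
assert bool_matvec(L, vector("O")) == [0, 0, 1, 0, 0, 0, 0]

# The matrix of the relative product agrees with the pairwise computation.
assert bool_matmul(L, S) == matrix({("B", "O"), ("E", "O"), ("I", "D")})
```

Using logical operations instead of integer arithmetic keeps every entry in the set of values 0 and 1, which is what makes the result a logical vector or logical matrix again.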

| |

| − |

| |

| − | Here are the matrix representations of the products that we calculated before:

| |

| − |

| |

| − | {| align="center" cellpadding="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | \mathit{l}\mathbf{1} & = & \text{lover of anything} & =

| |

| − | \end{matrix}</math>

| |

| − | |-

| |

| − | |

| |

| − | <math>

| |

| − | \begin{bmatrix}

| |

| − | 0 & 1 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 1 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 1 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 1 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | \begin{bmatrix}

| |

| − | 1 \\ 1 \\ 1 \\ 1 \\ 1 \\ 1 \\ 1

| |

| − | \end{bmatrix}

| |

| − | =

| |

| − | \begin{bmatrix}

| |

| − | 1 \\ 1 \\ 1 \\ 1 \\ 1 \\ 0 \\ 1

| |

| − | \end{bmatrix}

| |

| − | </math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellpadding="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | \mathit{l}\mathrm{b} & = & \text{lover of a black} & =

| |

| − | \end{matrix}</math>

| |

| − | |-

| |

| − | |

| |

| − | <math>

| |

| − | \begin{bmatrix}

| |

| − | 0 & 1 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 1 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 1 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 1 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | \begin{bmatrix}

| |

| − | 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 1

| |

| − | \end{bmatrix}

| |

| − | =

| |

| − | \begin{bmatrix}

| |

| − | 0 \\ 0 \\ 1 \\ 0 \\ 0 \\ 0 \\ 0

| |

| − | \end{bmatrix}

| |

| − | </math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellpadding="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | \mathit{l}\mathrm{m} & = & \text{lover of a man} & =

| |

| − | \end{matrix}</math>

| |

| − | |-

| |

| − | |

| |

| − | <math>

| |

| − | \begin{bmatrix}

| |

| − | 0 & 1 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 1 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 1 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 1 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | \begin{bmatrix}

| |

| − | 0 \\ 1 \\ 0 \\ 0 \\ 1 \\ 1 \\ 1

| |

| − | \end{bmatrix}

| |

| − | =

| |

| − | \begin{bmatrix}

| |

| − | 1 \\ 0 \\ 1 \\ 1 \\ 0 \\ 0 \\ 0

| |

| − | \end{bmatrix}

| |

| − | </math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellpadding="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | \mathit{l}\mathrm{w} & = & \text{lover of a woman} & =

| |

| − | \end{matrix}</math>

| |

| − | |-

| |

| − | |

| |

| − | <math>

| |

| − | \begin{bmatrix}

| |

| − | 0 & 1 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 1 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 1 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 1 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | \begin{bmatrix}

| |

| − | 1 \\ 0 \\ 1 \\ 1 \\ 0 \\ 0 \\ 0

| |

| − | \end{bmatrix}

| |

| − | =

| |

| − | \begin{bmatrix}

| |

| − | 0 \\ 1 \\ 0 \\ 0 \\ 1 \\ 0 \\ 1

| |

| − | \end{bmatrix}\!

| |

| − | </math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellpadding="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | \mathit{s}\mathbf{1} & = & \text{servant of anything} & =

| |

| − | \end{matrix}</math>

| |

| − | |-

| |

| − | |

| |

| − | <math>

| |

| − | \begin{bmatrix}

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | \begin{bmatrix}

| |

| − | 1 \\ 1 \\ 1 \\ 1 \\ 1 \\ 1 \\ 1

| |

| − | \end{bmatrix}

| |

| − | =

| |

| − | \begin{bmatrix}

| |

| − | 0 \\ 1 \\ 0 \\ 1 \\ 1 \\ 1 \\ 0

| |

| − | \end{bmatrix}

| |

| − | </math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellpadding="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | \mathit{s}\mathrm{b} & = & \text{servant of a black} & =

| |

| − | \end{matrix}</math>

| |

| − | |-

| |

| − | |

| |

| − | <math>

| |

| − | \begin{bmatrix}

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | \begin{bmatrix}

| |

| − | 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 0 \\ 1

| |

| − | \end{bmatrix}

| |

| − | =

| |

| − | \begin{bmatrix}

| |

| − | 0 \\ 1 \\ 0 \\ 0 \\ 1 \\ 1 \\ 0

| |

| − | \end{bmatrix}

| |

| − | </math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellpadding="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | \mathit{s}\mathrm{m} & = & \text{servant of a man} & =

| |

| − | \end{matrix}</math>

| |

| − | |-

| |

| − | |

| |

| − | <math>

| |

| − | \begin{bmatrix}

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | \begin{bmatrix}

| |

| − | 0 \\ 1 \\ 0 \\ 0 \\ 1 \\ 1 \\ 1

| |

| − | \end{bmatrix}

| |

| − | =

| |

| − | \begin{bmatrix}

| |

| − | 0 \\ 1 \\ 0 \\ 0 \\ 1 \\ 1 \\ 0

| |

| − | \end{bmatrix}

| |

| − | </math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellpadding="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | \mathit{s}\mathrm{w} & = & \text{servant of a woman} & =

| |

| − | \end{matrix}\!</math>

| |

| − | |-

| |

| − | |

| |

| − | <math>

| |

| − | \begin{bmatrix}

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | \begin{bmatrix}

| |

| − | 1 \\ 0 \\ 1 \\ 1 \\ 0 \\ 0 \\ 0

| |

| − | \end{bmatrix}

| |

| − | =

| |

| − | \begin{bmatrix}

| |

| − | 0 \\ 0 \\ 0 \\ 1 \\ 0 \\ 1 \\ 0

| |

| − | \end{bmatrix}

| |

| − | </math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellpadding="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | \mathit{l}\mathit{s} & = & \text{lover of a servant of ---} & =

| |

| − | \end{matrix}</math>

| |

| − | |-

| |

| − | |

| |

| − | <math>

| |

| − | \begin{bmatrix}

| |

| − | 0 & 1 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 1 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 1 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 1 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | \begin{bmatrix}

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | =

| |

| − | \begin{bmatrix}

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | </math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellpadding="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{matrix}

| |

| − | \mathit{s}\mathit{l} & = & \text{servant of a lover of ---} & =

| |

| − | \end{matrix}</math>

| |

| − | |-

| |

| − | |

| |

| − | <math>

| |

| − | \begin{bmatrix}

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | \begin{bmatrix}

| |

| − | 0 & 1 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 1 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 1 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 1 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | =

| |

| − | \begin{bmatrix}

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 0

| |

| − | \\

| |

| − | 0 & 0 & 1 & 0 & 0 & 0 & 1

| |

| − | \\

| |

| − | 0 & 0 & 0 & 0 & 0 & 0 & 0

| |

| − | \end{bmatrix}

| |

| − | </math>

| |

| − | |}
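
The matrix products displayed above can be checked mechanically. What follows is only a minimal sketch, assuming the row and column order <math>\mathrm{B}, \mathrm{C}, \mathrm{D}, \mathrm{E}, \mathrm{I}, \mathrm{J}, \mathrm{O}</math> and reading the pairs of each relative directly off the matrices shown. The names <code>relation_matrix</code>, <code>apply</code>, and <code>compose</code> are hypothetical helpers introduced for illustration, not part of Peirce's notation.

```python
# Assumed ordering of the universe of discourse: B, C, D, E, I, J, O.
NAMES = ["B", "C", "D", "E", "I", "J", "O"]
INDEX = {name: k for k, name in enumerate(NAMES)}


def relation_matrix(pairs):
    """Logical matrix with a 1 in row i, column j for each elementary relative i:j."""
    matrix = [[0] * len(NAMES) for _ in NAMES]
    for i, j in pairs:
        matrix[INDEX[i]][INDEX[j]] = 1
    return matrix


def column(members):
    """Column array of an absolute term: 1 for members, 0 for non-members."""
    return [1 if name in members else 0 for name in NAMES]


def apply(matrix, vector):
    """Relative applied to an absolute term (boolean matrix-vector product)."""
    return [int(any(a and b for a, b in zip(row, vector))) for row in matrix]


def compose(p, q):
    """Relative product of two dyadic relatives (boolean matrix product)."""
    n = len(NAMES)
    return [[int(any(p[i][k] and q[k][j] for k in range(n)))
             for j in range(n)] for i in range(n)]


# Pairs read off the lover and servant matrices displayed above.
LOVER = relation_matrix([("B", "C"), ("C", "B"), ("D", "O"),
                         ("E", "I"), ("I", "E"), ("O", "D")])
SERVANT = relation_matrix([("C", "O"), ("E", "D"), ("I", "O"),
                           ("J", "D"), ("J", "O")])
MAN = column({"C", "I", "J", "O"})
WOMAN = column({"B", "D", "E"})

lover_of_a_man = apply(LOVER, MAN)
servant_of_a_woman = apply(SERVANT, WOMAN)
lover_of_a_servant = compose(LOVER, SERVANT)
servant_of_a_lover = compose(SERVANT, LOVER)
```

Sums are taken in the logical sense, so a cell of a product is 1 as soon as a single intermediate individual links the row to the column.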

| |

| − |

| |

| − | ===Commentary Note 8.6===

| |

| − |

| |

| − | The foregoing has hopefully filled in enough background that we can begin to make sense of the more mysterious parts of CP 3.73.

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%" <!--QUOTE-->

| |

| − | |

| |

| − | <p>Thus far, we have considered the multiplication of relative terms only. Since our conception of multiplication is the application of a relation, we can only multiply absolute terms by considering them as relatives.</p>

| |

| − |

| |

| − | <p>Now the absolute term “man” is really exactly equivalent to the relative term “man that is ——”, and so with any other. I shall write a comma after any absolute term to show that it is so regarded as a relative term.</p>

| |

| − |

| |

| − | <p>Then “man that is black” will be written:</p>

| |

| − | |-

| |

| − | | align="center" | <math>\mathrm{m},\!\mathrm{b}</math>

| |

| − | |-

| |

| − | |

| |

| − | <p>(Peirce, CP 3.73).</p>

| |

| − | |}

| |

| − |

| |

| − | In any system where elements are organized according to types, there tend to be any number of ways in which elements of one type are naturally associated with elements of another type. If the association is anything like a logical equivalence, but with the first type being lower and the second type being higher in some sense, then one may speak of a ''semantic ascent'' from the lower to the higher type.

| |

| − |

| |

| − | For example, it is common in mathematics to associate an element <math>a\!</math> of a set <math>A\!</math> with the constant function <math>f_a : X \to A</math> that has <math>f_a (x) = a\!</math> for all <math>x\!</math> in <math>X,\!</math> where <math>X\!</math> is an arbitrary set. Indeed, the correspondence is so close that one often uses the same name <math>{}^{\backprime\backprime} a {}^{\prime\prime}</math> to denote both the element <math>a\!</math> in <math>A\!</math> and the function <math>a = f_a : X \to A,</math> relying on the context or an explicit type indication to tell them apart.

| |

| − |

| |

| − | For another example, we have the ''tacit extension'' of a <math>k\!</math>-place relation <math>L \subseteq X_1 \times \ldots \times X_k\!</math> to a <math>(k+1)\!</math>-place relation <math>L^\prime \subseteq X_1 \times \ldots \times X_{k+1}\!</math> that we get by letting <math>L^\prime = L \times X_{k+1},</math> that is, by maintaining the constraints of <math>L\!</math> on the first <math>k\!</math> variables and letting the last variable wander freely.
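
The tacit extension just described can be sketched in a few lines. This is an illustration under stated assumptions: relations are modeled as sets of tuples, and the sample relation <code>L</code> and domain <code>X3</code> are hypothetical data chosen only to exercise the definition <math>L^\prime = L \times X_{k+1}.</math>

```python
from itertools import product


def tacit_extension(relation, extra_domain):
    """Return L' = L x X_{k+1}: every tuple of L extended by every element
    of the new domain, so the last variable is left unconstrained."""
    return {tup + (x,) for tup, x in product(relation, extra_domain)}


# Hypothetical 2-place relation and third domain, for illustration only.
L = {("B", "C"), ("C", "B")}
X3 = {"D", "O"}

L_prime = tacit_extension(L, X3)

# Dropping the last coordinate recovers the original relation.
projection = {tup[:-1] for tup in L_prime}
```

The constraints of the original relation survive on the first two places, while the third place ranges over the whole of the added domain.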

| |

| − |

| |

| − | What we have here, if I understand Peirce correctly, is another such type of natural extension, sometimes called the ''diagonal extension''. This extension associates a <math>k\!</math>-adic relative or a <math>k\!</math>-adic relation, counting the absolute term and the set whose elements it denotes as the cases for <math>k = 0,\!</math> with a series of relatives and relations of higher adicities.

| |

| − |

| |

| − | A few examples will suffice to anchor these ideas.

| |

| − |

| |

| − | Absolute terms:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{11}{c}}

| |

| − | \mathrm{m}

| |

| − | & = & \text{man}

| |

| − | & = & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{n}

| |

| − | & = & \text{noble}

| |

| − | & = & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{w}

| |

| − | & = & \text{woman}

| |

| − | & = & \mathrm{B}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | Diagonal extensions:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{11}{c}}

| |

| − | \mathrm{m,}

| |

| − | & = & \text{man that is}\, \underline{~~ ~~}

| |

| − | & = & \mathrm{C}:\mathrm{C}

| |

| − | & +\!\!, & \mathrm{I}:\mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}:\mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}:\mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{n,}

| |

| − | & = & \text{noble that is}\, \underline{~~ ~~}

| |

| − | & = & \mathrm{C}:\mathrm{C}

| |

| − | & +\!\!, & \mathrm{D}:\mathrm{D}

| |

| − | & +\!\!, & \mathrm{O}:\mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{w,}

| |

| − | & = & \text{woman that is}\, \underline{~~ ~~}

| |

| − | & = & \mathrm{B}:\mathrm{B}

| |

| − | & +\!\!, & \mathrm{D}:\mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}:\mathrm{E}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | Sample products:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathrm{m},\!\mathrm{n}

| |

| − | & = &

| |

| − | \text{man that is noble}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{C}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{J} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{O})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{C} ~+\!\!,~ \mathrm{D} ~+\!\!,~ \mathrm{O})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{C} ~+\!\!,~ \mathrm{O}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathrm{n},\!\mathrm{m}

| |

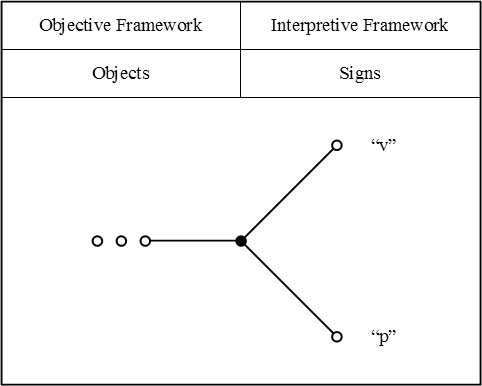

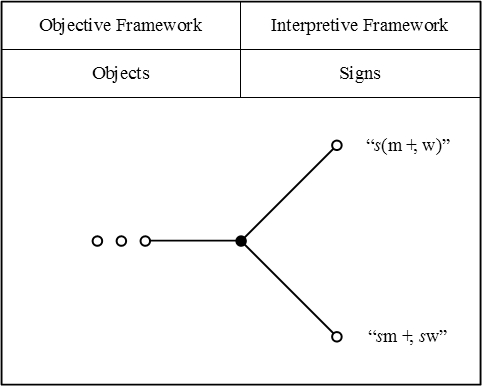

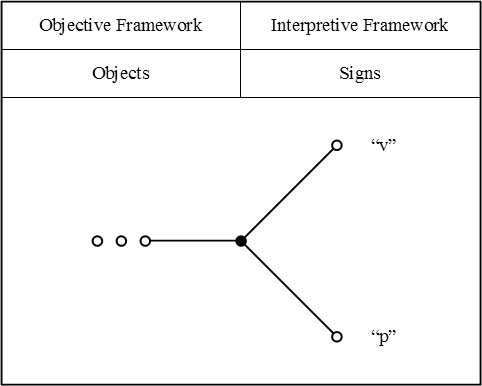

| − | & = &

| |

| − | \text{noble that is a man}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{C}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{O})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{C} ~+\!\!,~ \mathrm{I} ~+\!\!,~ \mathrm{J} ~+\!\!,~ \mathrm{O})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{C} ~+\!\!,~ \mathrm{O}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathrm{w},\!\mathrm{n}

| |

| − | & = &

| |

| − | \text{woman that is noble}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{B}\!:\!\mathrm{B} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{E})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{C} ~+\!\!,~ \mathrm{D} ~+\!\!,~ \mathrm{O})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{D}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathrm{n},\!\mathrm{w}

| |

| − | & = &

| |

| − | \text{noble that is a woman}

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | (\mathrm{C}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{O})

| |

| − | \\

| |

| − | & &

| |

| − | \times

| |

| − | \\

| |

| − | & &

| |

| − | (\mathrm{B} ~+\!\!,~ \mathrm{D} ~+\!\!,~ \mathrm{E})

| |

| − | \\[6pt]

| |

| − | & = &

| |

| − | \mathrm{D}

| |

| − | \end{array}</math>

| |

| − | |}
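
The sample products above can be reproduced by treating a relative as a set of elementary relatives <math>i\!:\!j.</math> The following is a minimal sketch under that assumption. The function names <code>diagonal_extension</code>, <code>apply_relative</code>, and <code>comma</code> are hypothetical labels introduced for illustration.

```python
def diagonal_extension(term):
    """Comma inflexion of an absolute term: the pairs x:x for each x in the term."""
    return {(x, x) for x in term}


def apply_relative(relative, term):
    """Relative product with an absolute term: keep i whenever
    some pair i:j of the relative has j in the term."""
    return {i for (i, j) in relative if j in term}


def comma(p, q):
    """The product written 'p,q': apply the diagonal extension of p to q."""
    return apply_relative(diagonal_extension(p), q)


MAN = {"C", "I", "J", "O"}
NOBLE = {"C", "D", "O"}
WOMAN = {"B", "D", "E"}

man_that_is_noble = comma(MAN, NOBLE)
noble_that_is_a_man = comma(NOBLE, MAN)
woman_that_is_noble = comma(WOMAN, NOBLE)
noble_that_is_a_woman = comma(NOBLE, WOMAN)
```

In each case the result coincides with the ordinary intersection of the two sets, which is one way to see why the order of the factors makes no difference.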

| |

| − |

| |

| − | ==Selection 9==

| |

| − |

| |

| − | ===The Signs for Multiplication (cont.)===

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%" <!--QUOTE-->

| |

| − | |

| |

| − | <p>It is obvious that multiplication into a multiplicand indicated by a comma is commutative<sup>1</sup>, that is,</p>

| |

| − | |-

| |

| − | | align="center" | <math>\mathit{s},\!\mathit{l} ~=~ \mathit{l},\!\mathit{s}</math>

| |

| − | |-

| |

| − | |

| |

| − | <p>This multiplication is effectively the same as that of Boole in his logical calculus. Boole's unity is my <math>\mathbf{1},</math> that is, it denotes whatever is.</p>

| |

| − |

| |

| − | #<p>It will often be convenient to speak of the whole operation of affixing a comma and then multiplying as a commutative multiplication, the sign for which is the comma. But though this is allowable, we shall fall into confusion at once if we ever forget that in point of fact it is not a different multiplication, only it is multiplication by a relative whose meaning — or rather whose syntax — has been slightly altered; and that the comma is really the sign of this modification of the foregoing term.</p>

| |

| − |

| |

| − | <p>(Peirce, CP 3.74).</p>

| |

| − | |}

| |

| − |

| |

| − | ===Commentary Note 9.1===

| |

| − |

| |

| − | Let us backtrack a few years, and consider how George Boole explained his twin conceptions of ''selective operations'' and ''selective symbols''.

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%" <!--QUOTE-->

| |

| − | |

| |

| − | <p>Let us then suppose that the universe of our discourse is the actual universe, so that words are to be used in the full extent of their meaning, and let us consider the two mental operations implied by the words “white” and “men”. The word “men” implies the operation of selecting in thought from its subject, the universe, all men; and the resulting conception, ''men'', becomes the subject of the next operation. The operation implied by the word “white” is that of selecting from its subject, “men”, all of that class which are white. The final resulting conception is that of “white men”.</p>

| |

| − |

| |

| − | <p>Now it is perfectly apparent that if the operations above described had been performed in a converse order, the result would have been the same. Whether we begin by forming the conception of “''men''”, and then by a second intellectual act limit that conception to “white men”, or whether we begin by forming the conception of “white objects”, and then limit it to such of that class as are “men”, is perfectly indifferent so far as the result is concerned. It is obvious that the order of the mental processes would be equally indifferent if for the words “white” and “men” we substituted any other descriptive or appellative terms whatever, provided only that their meaning was fixed and absolute. And thus the indifference of the order of two successive acts of the faculty of Conception, the one of which furnishes the subject upon which the other is supposed to operate, is a general condition of the exercise of that faculty. It is a law of the mind, and it is the real origin of that law of the literal symbols of Logic which constitutes its formal expression (1) Chap. II, [ namely, <math>xy = yx~\!</math> ].</p>

| |

| − |

| |

| − | <p>It is equally clear that the mental operation above described is of such a nature that its effect is not altered by repetition. Suppose that by a definite act of conception the attention has been fixed upon men, and that by another exercise of the same faculty we limit it to those of the race who are white. Then any further repetition of the latter mental act, by which the attention is limited to white objects, does not in any way modify the conception arrived at, viz., that of white men. This is also an example of a general law of the mind, and it has its formal expression in the law ((2) Chap. II) of the literal symbols [ namely, <math>x^2 = x\!</math> ].</p>

| |

| − |

| |

| − | <p>(Boole, ''Laws of Thought'', 44–45).</p>

| |

| − | |}

| |

| − |

| |

| − | ===Commentary Note 9.2===

| |

| − |

| |

| − | In setting up his discussion of selective operations and their corresponding selective symbols, Boole writes this:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%" <!--QUOTE-->

| |

| − | |

| |

| − | <p>The operation which we really perform is one of ''selection according to a prescribed principle or idea''. To what faculties of the mind such an operation would be referred, according to the received classification of its powers, it is not important to inquire, but I suppose that it would be considered as dependent upon the two faculties of Conception or Imagination, and Attention. To the one of these faculties might be referred the formation of the general conception; to the other the fixing of the mental regard upon those individuals within the prescribed universe of discourse which answer to the conception. If, however, as seems not improbable, the power of Attention is nothing more than the power of continuing the exercise of any other faculty of the mind, we might properly regard the whole of the mental process above described as referrible to the mental faculty of Imagination or Conception, the first step of the process being the conception of the Universe itself, and each succeeding step limiting in a definite manner the conception thus formed. Adopting this view, I shall describe each such step, or any definite combination of such steps, as a ''definite act of conception''.</p>

| |

| − |

| |

| − | <p>(Boole, ''Laws of Thought'', 43).</p>

| |

| − | |}

| |

| − |

| |

| − | ===Commentary Note 9.3===

| |

| − |

| |

| − | In algebra, an ''idempotent element'' <math>x\!</math> is one that obeys the ''idempotent law'', that is, it satisfies the equation <math>xx = x.\!</math> Under most circumstances, it is usual to write this as <math>x^2 = x.\!</math>

| |

| − |

| |

| − | If the algebraic system in question falls under the additional laws that are necessary to carry out the requisite transformations, then <math>x^2 = x\!</math> is convertible into <math>x - x^2 = 0,\!</math> and this into <math>x(1 - x) = 0.\!</math>

| |

| − |

| |

| − | If the algebraic system in question happens to be a boolean algebra, then the equation <math>x(1 - x) = 0\!</math> says that <math>x \land \lnot x</math> is identically false, in effect, a statement of the classical principle of non-contradiction.
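
The chain of conversions from <math>x^2 = x\!</math> to <math>x(1 - x) = 0\!</math> can be spot-checked numerically. The sketch below assumes ordinary integer arithmetic over a small sample range, which is only one of the algebraic systems the remarks above allow for.

```python
def is_idempotent(x):
    """True when x satisfies the idempotent law x * x == x."""
    return x * x == x


def contradiction_form(x):
    """The expression x(1 - x), which the idempotent law sets equal to 0."""
    return x * (1 - x)


SAMPLE = range(-3, 4)  # arbitrary sample of integers, for illustration

# Among the sampled integers only 0 and 1 are idempotent.
idempotents = [x for x in SAMPLE if is_idempotent(x)]

# The two forms of the law agree on every sampled value.
forms_agree = all(is_idempotent(x) == (contradiction_form(x) == 0) for x in SAMPLE)

# Read as truth values, x and (not x) are never both true.
never_both = all(not (bool(x) and not bool(x)) for x in idempotents)
```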

| |

| − |

| |

| − | We have already seen how Boole found rationales for the commutative law and the idempotent law by contemplating the properties of ''selective operations''.

| |

| − |

| |

| − | It is time to bring these threads together, which we can do by considering the so-called ''idempotent representation'' of sets. This will give us one of the best ways to understand the significance that Boole attached to selective operations. It will also link up with the statements that Peirce makes about his adicity-augmenting comma operation.

| |

| − |

| |

| − | ===Commentary Note 9.4===

| |

| − |

| |

| − | Boole rationalized the properties of what we now call ''boolean multiplication'', roughly equivalent to logical conjunction, in terms of the laws that apply to selective operations. Peirce, in his turn, taking a very significant step of analysis that has seldom been recognized for what it would lead to, does not consider this multiplication to be a fundamental operation, but derives it as a by-product of relative multiplication by a comma relative. Thus, Peirce makes logical conjunction a special case of relative composition.

| |

| − |

| |

| − | This opens up a very wide field of investigation, ''the operational significance of logical terms'', one might say, but it will be best to advance bit by bit, and to lean on simple examples.

| |

| − |

| |

| − | Back to Venice, and the close-knit party of absolutes and relatives that we were entertaining when last we were there.

| |

| − |

| |

| − | Here is the list of absolute terms that we were considering before, to which I have added <math>\mathbf{1},</math> the universe of ''anything'', just for good measure:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{17}{l}}

| |

| − | \mathbf{1}

| |

| − | & = & \text{anything}

| |

| − | & = & \mathrm{B}

| |

| − | & +\!\!, & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}

| |

| − | & +\!\!, & \mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{m}

| |

| − | & = & \text{man}

| |

| − | & = & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{I}

| |

| − | & +\!\!, & \mathrm{J}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{n}

| |

| − | & = & \text{noble}

| |

| − | & = & \mathrm{C}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{w}

| |

| − | & = & \text{woman}

| |

| − | & = & \mathrm{B}

| |

| − | & +\!\!, & \mathrm{D}

| |

| − | & +\!\!, & \mathrm{E}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | Here is the list of ''comma inflexions'' or ''diagonal extensions'' of these terms:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathbf{1,}

| |

| − | & = & \text{anything that is}\, \underline{~~ ~~}

| |

| − | \\[6pt]

| |

| − | & = & \mathrm{B}\!:\!\mathrm{B} ~+\!\!,~ \mathrm{C}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{E} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{J} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{O}

| |

| − | \\[9pt]

| |

| − | \mathrm{m,}

| |

| − | & = & \text{man that is}\, \underline{~~ ~~}

| |

| − | \\[6pt]

| |

| − | & = & \mathrm{C}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{J} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{O}

| |

| − | \\[9pt]

| |

| − | \mathrm{n,}

| |

| − | & = & \text{noble that is}\, \underline{~~ ~~}

| |

| − | \\[6pt]

| |

| − | & = & \mathrm{C}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{O}

| |

| − | \\[9pt]

| |

| − | \mathrm{w,}

| |

| − | & = & \text{woman that is}\, \underline{~~ ~~}

| |

| − | \\[6pt]

| |

| − | & = & \mathrm{B}\!:\!\mathrm{B} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{E}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | One observes that the diagonal extension of <math>\mathbf{1}</math> is the same thing as the identity relation <math>\mathit{1}.\!</math>

| |

| − |

| |

| − | Working within our smaller sample of absolute terms, we have already computed the sorts of products that apply the diagonal extension of an absolute term to another absolute term, for instance, these products:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lllll}

| |

| − | \mathrm{m},\!\mathrm{n}

| |

| − | & = & \text{man that is noble}

| |

| − | & = & \mathrm{C} ~+\!\!,~ \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{n},\!\mathrm{m}

| |

| − | & = & \text{noble that is a man}

| |

| − | & = & \mathrm{C} ~+\!\!,~ \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{w},\!\mathrm{n}

| |

| − | & = & \text{woman that is noble}

| |

| − | & = & \mathrm{D}

| |

| − | \\[6pt]

| |

| − | \mathrm{n},\!\mathrm{w}

| |

| − | & = & \text{noble that is a woman}

| |

| − | & = & \mathrm{D}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | This exercise gave us a bit of practical insight into why the commutative law holds for logical conjunction.

| |

| − |

| |

| − | Further insight into the laws that govern this realm of logic, and the underlying reasons why they apply, might be gained by systematically working through the whole variety of different products that are generated by the operational means in sight, namely, the products indicated by <math>\{\mathbf{1}, \mathrm{m}, \mathrm{n}, \mathrm{w} \} , \{\mathbf{1}, \mathrm{m}, \mathrm{n}, \mathrm{w} \}.</math>

| |

| − |

| |

| − | But before we try to explore this territory more systematically, let us equip our intuitions with the forms of graphical and matrical representation that served us so well in our previous adventures.

| |

| − |

| |

| − | ===Commentary Note 9.5===

| |

| − |

| |

| − | Peirce's comma operation, in its application to an absolute term, is tantamount to the representation of that term's denotation as an idempotent transformation, which is commonly represented as a diagonal matrix. Hence the alternate name, ''diagonal extension''.

| |

| − |

| |

| − | An idempotent element <math>x\!</math> is given by the abstract condition that <math>xx = x,\!</math> but elements like these are commonly encountered in more concrete circumstances, acting as operators or transformations on other sets or spaces, and in that action they will often be represented as matrices of coefficients.
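
A concrete instance may help here. The sketch below assumes the ordering <math>\mathrm{B}, \mathrm{C}, \mathrm{D}, \mathrm{E}, \mathrm{I}, \mathrm{J}, \mathrm{O}</math> of the universe and uses boolean matrix multiplication. The helper names <code>diagonal_matrix</code> and <code>bool_product</code> are hypothetical, chosen only for illustration.

```python
# Assumed ordering of the universe of discourse.
NAMES = ["B", "C", "D", "E", "I", "J", "O"]


def diagonal_matrix(term):
    """Matrix of the diagonal extension: 1 at (x, x) for each x in the term."""
    return [[int(row == col and row in term) for col in NAMES] for row in NAMES]


def bool_product(p, q):
    """Boolean matrix product, with logical 'or' in place of addition."""
    n = len(NAMES)
    return [[int(any(p[i][k] and q[k][j] for k in range(n)))
             for j in range(n)] for i in range(n)]


MAN = {"C", "I", "J", "O"}
NOBLE = {"C", "D", "O"}

M = diagonal_matrix(MAN)
N = diagonal_matrix(NOBLE)

# Applying the same selection twice changes nothing: M is idempotent.
m_squared = bool_product(M, M)

# Diagonal matrices commute, and their product selects the common members.
mn = bool_product(M, N)
nm = bool_product(N, M)

# The diagonal extension of the whole universe is the identity matrix.
identity = diagonal_matrix(set(NAMES))
```

Here the idempotent law and the commutative law both show up as plain facts about diagonal matrices of 0s and 1s.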

| |

| − |

| |

| − | Let's see how this looks in the matrix and graph pictures of absolute and relative terms:

| |

| − |

| |

| − | ====Absolute Terms====

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{*{17}{l}}

| |

| − | \mathbf{1} & = & \text{anything} & = &

| |

| − | \mathrm{B} & +\!\!, &

| |

| − | \mathrm{C} & +\!\!, &

| |

| − | \mathrm{D} & +\!\!, &

| |

| − | \mathrm{E} & +\!\!, &

| |

| − | \mathrm{I} & +\!\!, &

| |

| − | \mathrm{J} & +\!\!, &

| |

| − | \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{m} & = & \text{man} & = &

| |

| − | \mathrm{C} & +\!\!, &

| |

| − | \mathrm{I} & +\!\!, &

| |

| − | \mathrm{J} & +\!\!, &

| |

| − | \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{n} & = & \text{noble} & = &

| |

| − | \mathrm{C} & +\!\!, &

| |

| − | \mathrm{D} & +\!\!, &

| |

| − | \mathrm{O}

| |

| − | \\[6pt]

| |

| − | \mathrm{w} & = & \text{woman} & = &

| |

| − | \mathrm{B} & +\!\!, &

| |

| − | \mathrm{D} & +\!\!, &

| |

| − | \mathrm{E}

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

| − | Previously, we represented absolute terms as column arrays. The above four terms are given by the columns of the following table:

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{c|cccc}

| |

| − | \text{ } & \mathbf{1} & \mathrm{m} & \mathrm{n} & \mathrm{w} \\

| |

| − | \text{---} & \text{---} & \text{---} & \text{---} & \text{---} \\

| |

| − | \mathrm{B} & 1 & 0 & 0 & 1 \\

| |

| − | \mathrm{C} & 1 & 1 & 1 & 0 \\

| |

| − | \mathrm{D} & 1 & 0 & 1 & 1 \\

| |

| − | \mathrm{E} & 1 & 0 & 0 & 1 \\

| |

| − | \mathrm{I} & 1 & 1 & 0 & 0 \\

| |

| − | \mathrm{J} & 1 & 1 & 0 & 0 \\

| |

| − | \mathrm{O} & 1 & 1 & 1 & 0

| |

| − | \end{array}</math>

| |

| − | |}

| |

| − |

| |

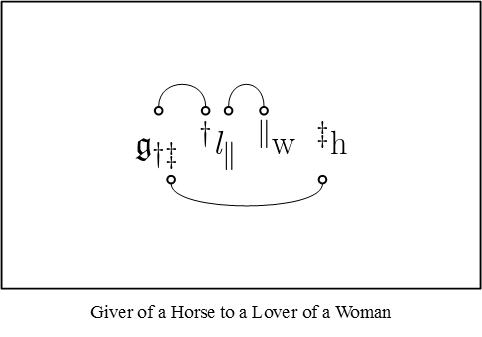

| − | The types of graphs known as ''bigraphs'' or ''bipartite graphs'' can be used to picture simple relative terms, dyadic relations, and their corresponding logical matrices. One way to bring absolute terms and their corresponding sets of individuals into the bigraph picture is to mark the nodes in some way, for example, hollow nodes for non-members and filled nodes for members of the indicated set, as shown below:

| |

| − |

| |

| − | {| align="center" cellpadding="10" width="90%"

| |

| − | | [[Image:LOR 1870 Figure 4.1.jpg]] || (4.1)

| |

| − | |-

| |

| − | | [[Image:LOR 1870 Figure 4.2.jpg]] || (4.2)

| |

| − | |-

| |

| − | | [[Image:LOR 1870 Figure 4.3.jpg]] || (4.3)

| |

| − | |-

| |

| − | | [[Image:LOR 1870 Figure 4.4.jpg]] || (4.4)

| |

| − | |}

| |

| − |

| |

| − | ====Diagonal Extensions====

| |

| − |

| |

| − | {| align="center" cellspacing="6" width="90%"

| |

| − | |

| |

| − | <math>\begin{array}{lll}

| |

| − | \mathbf{1,}

| |

| − | & = & \text{anything that is}\, \underline{~~ ~~}

| |

| − | \\[6pt]

| |

| − | & = & \mathrm{B}\!:\!\mathrm{B} ~+\!\!,~ \mathrm{C}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{E}\!:\!\mathrm{E} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{J} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{O}

| |

| − | \\[9pt]

| |

| − | \mathrm{m,}

| |

| − | & = & \text{man that is}\, \underline{~~ ~~}

| |

| − | \\[6pt]

| |

| − | & = & \mathrm{C}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{I}\!:\!\mathrm{I} ~+\!\!,~ \mathrm{J}\!:\!\mathrm{J} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{O}

| |

| − | \\[9pt]

| |

| − | \mathrm{n,}

| |

| − | & = & \text{noble that is}\, \underline{~~ ~~}

| |

| − | \\[6pt]

| |

| − | & = & \mathrm{C}\!:\!\mathrm{C} ~+\!\!,~ \mathrm{D}\!:\!\mathrm{D} ~+\!\!,~ \mathrm{O}\!:\!\mathrm{O}

| |

| − | \\[9pt]

| |

| − | \mathrm{w,}

| |